Illustration by Jocelyn Urbina

Computer Science Students Explore Racial Profiling by Oakland Police

December 30, 2020

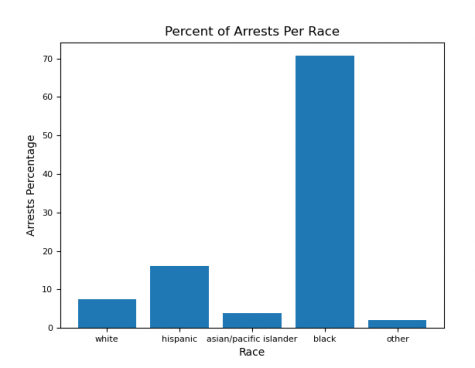

A starkly disproportionate number of Black drivers are arrested in the course of traffic stops by police in Oakland, according to a new analysis of police department data conducted by the Advanced Computer Science class as its final project for the fall semester.

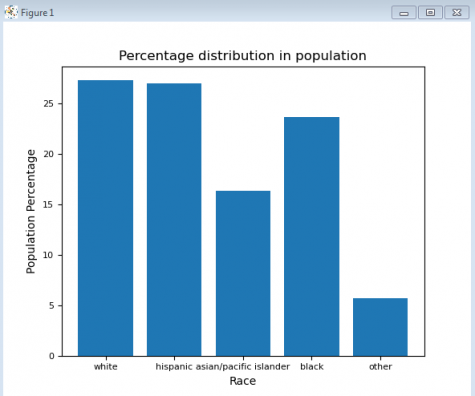

The analysis of publicly available reports on traffic stops made from 2013 to 2017 revealed that, although the population of Oakland is roughly 24% Black, almost 60% of drivers pulled over by police were Black, and Black drivers accounted for 70% of those arrested as a result of the traffic stop.

Students in the Advanced Computer Science class used the data to hone their data analysis skills while examining whether it revealed possible evidence of racial profiling by police.

“Our knowledge is expanding with what we can do with computer science. In the beginning, when we had barely started, we learned that computer science was using code to solve real-world problems so developing these skills is helping us put it into good use,” said sophomore Angie Perez, one of the Advanced CS students who focused on the arrest data in particular.

Since data analysis has become a popular and growing area of computer science, CS teacher Anil Vempati has been giving students real-world data to analyze on problems in society, such as climate change and policing. In the aftermath of the killing of George Floyd and the Black Lives Matter movement, Anil saw a great opportunity for students to learn computer science skills while examining data on traffic stops and comparing it with the overall demographics of the city’s population.

“It’s current, and it’s perfect for us,” Anil said via Zoom. “We analyze some data anyway, so might as well analyze something current and relevant.”

Anil said he chose this topic not only for its relevance but also to help students use data to separate fact from fiction. With coding skills, the students could dig deeper into whether police traffic stops actually show racial bias, which could be a sign of racism.

“How do you separate that?” Anil said. “The best way that I know, and a lot of people know, a lot are thinking of is, ‘Well, why don’t we just gather data?’”

Anil’s inspiration came from the Stanford Open Policing Project, which began in 2015 to analyze traffic stop records from police departments and state patrol agencies nationwide, with records ranging from December 1999 to 2018. After processing the data collected from these agencies, Stanford made it available for the public to study for signs of racial bias in policing. Anil downloaded the data and provided it to his students, who worked in teams to analyze it in different ways.

First, the students split into teams of two to work on the initial phases of the project, which involved preparing the data for Oakland from March 2013 through December 2017. Then, the pairs chose which aspects to explore more deeply using their coding skills.

Angie and sophomore Stephany Urbina worked as partners and chose to analyze the data on arrests made after traffic stops. They wanted to see whether the racial breakdown of those arrests matched the demographics of the city overall. The graphs they made of their results revealed the sharp difference buried in the data.

Black drivers were involved in some 60% of the traffic stops and accounted for 70% of the arrests that followed, in a city whose population is roughly 24% Black. By comparison, Hispanic residents make up 27% of the population but accounted for roughly 20% of the traffic stops and 17% of the arrests resulting from them.
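One simple way to put these figures side by side is a disparity ratio: a group's share of stops (or arrests) divided by its share of the population, where 1.0 means the group appears in proportion to its population. The short Python sketch below is only an illustration, not the students' actual code. It uses just the percentages reported in this article, and the function names and the record format used by `shares_by_group` are hypothetical.

```python
from collections import Counter

# Percentages reported in this article: share of Oakland's population,
# share of traffic stops, and share of arrests resulting from those stops.
REPORTED = {
    "Black": {"population": 24, "stops": 60, "arrests": 70},
    "Hispanic": {"population": 27, "stops": 20, "arrests": 17},
}


def disparity_ratio(outcome_share: float, population_share: float) -> float:
    """Ratio of a group's share of an outcome to its share of the population.

    1.0 means the group appears in proportion to its population share;
    values above 1.0 mean it is over-represented, below 1.0 under-represented.
    """
    return outcome_share / population_share


def shares_by_group(records: list[dict], group_key: str) -> dict[str, float]:
    """Percentage of records in each group.

    Hypothetical helper: assumes each stop record is a dict with a field
    (named by group_key) holding the driver's race.
    """
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    return {group: 100 * n / total for group, n in counts.items()}


if __name__ == "__main__":
    for group, share in REPORTED.items():
        stops = disparity_ratio(share["stops"], share["population"])
        arrests = disparity_ratio(share["arrests"], share["population"])
        print(f"{group}: stops {stops:.2f}x, arrests {arrests:.2f}x population share")
```

By this measure, Black drivers were stopped at 2.5 times their share of the population and arrested at about 2.9 times, while Hispanic drivers fell below 1.0 on both.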

Analyzing real-world problems such as racial bias in policing gave computer science itself more significant meaning, they said.

“I went into computer science thinking, I’m just going to try this out because it’s something new, I might like it,” Stephany said. “But I never expected us to come as far as processing this much data using data analysis. It opens my eyes to how many careers you can get within computer science.”

Although the Stanford Open Policing Project has found evidence of systemic racial bias in policing, these figures alone do not prove racial bias, because other aspects of the data remain to be examined, such as the original reasons for the stops and what happened during them.

Other teams in the Advanced Computer Science class dug into different aspects of the data, such as the gender breakdown of traffic stops or the outcome of the stops: arrests, citations, or other results.